從今天起,我們將實地建立英文到中文的翻譯神經網絡,今天先從語料庫到文本前處理開始。

在這裡我們由公開的平行語料庫來源網站選定下載英文-中文語料庫:Tab-delimited Bilingual Sentence Pairs

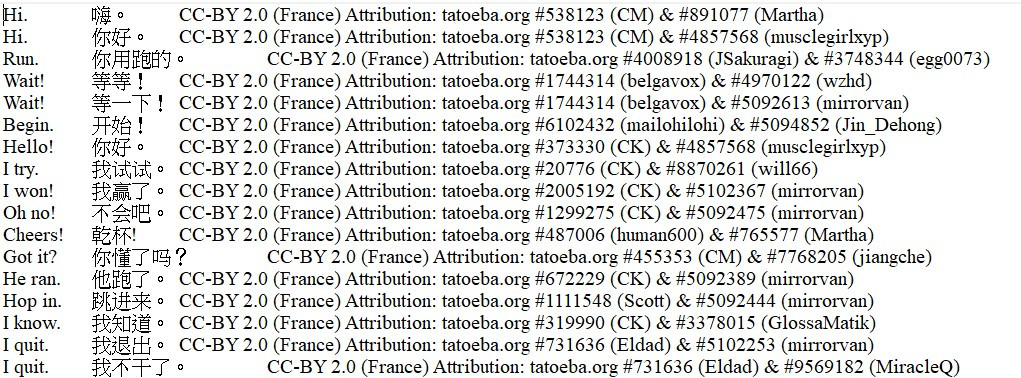

原始語料庫檔案如下:

首先,我們載入語料庫檔案,並以 list 物件來存取每一列文字:

import re

import pickle as pkl

data_path = "data/raw_parallel_corpora/eng-cn.txt"

with open(data_path, 'r', encoding = "utf-8") as f:

lines = f.read().split('\n')

我們將每列的句子依照不同語言分開,並且進行前處理(小寫轉換、去除多餘空白、並切開將每個單詞與標點符號),最後加入文句起始標示 <SOS> 與結尾標示 <EOS> 。由於書寫習慣的不同,中文和英文的前處理需要分開處理(或是英文-德文等相同語系就不必分開定義兩個函式):

def preprocess_cn(sentence):

"""

Lowercases a Chinese sentence and inserts a whitespace between two characters.

Surrounds the split sentence with <SOS> and <EOS>.

"""

# removes whitespaces from the beginning of a sentence and from the end of a sentence

sentence = sentence.lower().strip()

# removes redundant whitespaces among words

sentence = re.sub(r"[' ']+", " ", sentence)

sentence = sentence.strip()

# inserts a whitespace in between two words

sentence = " ".join(sentence)

# attaches starting token and ending token

sentence = "<SOS> " + sentence + " <EOS>"

return sentence

def preprocess_eng(sentence):

"""

Lowercases an English sentence and inserts a whitespace within 2 words or punctuations.

Surrounds the split sentence with <SOS> and <EOS>

"""

sentence = sentence.lower().strip()

sentence = re.sub(r"([,.!?\"'])", r" \1", sentence)

sentence = re.sub(r"\s+", " ", sentence)

sentence = re.sub(r"[^a-zA-Z,.!?\"']", ' ', sentence)

sentence = "<SOS> " + sentence + " <EOS>"

return sentence

接著將每一列文字分別存入 seq_pairs 中:

# regardless of source and target languages

seq_pairs = []

for line in lines:

# ensures that the line loaded contains Chinese and English sentences

if len(line.split('\t')) >= 3:

eng_doc, cn_doc, _ = line.split('\t')

eng_doc = preprocess_eng(eng_doc)

en_doc = preprocess_cn(tgt_doc)

seq_pairs.append([eng_doc, en_doc])

else:

continue

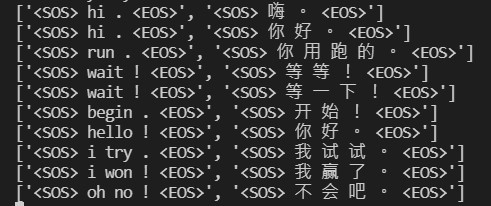

我們檢視一下前十筆經過前處理的英文-中文序列:

在正式建立資料集之前,我們將前處理後的雙語分別寫入 pkl 檔,以便後續建立資料集時直接使用,且可以依自己的喜好指定語中文或英文為來源或目標語言。

# Save list seq_pairs to file

with open("data/eng-cn.pkl", "wb") as f:

pkl.dump(seq_pairs, f)

在此次的翻譯實戰中,我將中文設定為輸入語言(來源語言)而英文設定為輸出語言(目標語言)。

假設今天在另一個程式中,我們可以從 pkl 檔案讀取出剛才處理好的字串,並且依照來源語言以及目標語言寫入文件中:

# Retrieve pickle file of sequence pairs

with open("data/eng-cn.pkl", "rb") as f:

seq_pairs = pkl.load(f)

# text corpora (source: English, target: Chinese)

src_docs = []

tgt_docs = []

src_tokens = []

tgt_tokens = []

for pair in seq_pairs:

src_doc, tgt_doc = pair

# English sentence

src_docs.append(src_doc)

# Chinese sentence

tgt_docs.append(tgt_doc)

# tokenisation

for token in src_doc.split():

if token not in src_tokens:

src_tokens.append(token)

for token in tgt_doc.split():

if token not in tgt_tokens:

tgt_tokens.append(token)

今天的進度就到這邊,明天接著繼續建立訓練資料集!各位小夥伴晚安!